CRISPR inspires new tricks to edit genes

Scientists usually shy away from using the word miracle — unless they’re talking about the gene-editing tool called CRISPR/Cas9. “You can do anything with CRISPR,” some say. Others just call it amazing.

CRISPR can quickly and efficiently manipulate virtually any gene in any plant or animal. In the four years since it was adapted as a gene editor, researchers have used CRISPR to fix genetic diseases in animals, combat viruses, sterilize mosquitoes and prepare pig organs for human transplants. Most experts think that’s just the beginning. CRISPR’s powerful possibilities (even the controversial notions of creating “designer babies” and eradicating entire species) are stunning and sometimes frightening.

So far CRISPR’s biggest impact has been felt in basic biology labs around the world. The inexpensive, easy-to-use gene editor has made it possible for researchers to delve into fundamental mysteries of life in ways that had been difficult or impossible. Developmental biologist Robert Reed likens CRISPR to a computer mouse. “You can just point it at a place in the genome and you can do anything you want at that spot.”

Anything, that is, as long as it involves cutting DNA. CRISPR/Cas9 in its original incarnation is a homing device (the CRISPR part) that guides molecular scissors (the Cas9 enzyme) to a target section of DNA. Together, they work as a genetic-engineering cruise missile that disables or repairs a gene, or inserts something new where it cuts.

Even with all the genetic feats the CRISPR/Cas9 system can do, “there were shortcomings. There were things we wanted to do better,” says MIT molecular biologist Feng Zhang, one of the first scientists to wield the molecular scissors. From his earliest report in 2013 of using CRISPR/Cas9 to cut genes in human and mouse cells, Zhang has described ways to make the system work more precisely and efficiently.

He isn’t alone. A flurry of papers in the last three years has detailed improvements to the editor. Going even further, a bevy of scientists, including Zhang, have dreamed up ways to make CRISPR do a toolbox’s worth of jobs.

Turning CRISPR into a multitasker often starts with dulling the cutting-edge technology’s cutting edge. In many of its new adaptations, the “dead” Cas9 scissors can’t snip DNA. Broken scissors may sound useless, but scientists have upcycled them into chromosome painters, typo-correctors, gene activity stimulators and inhibitors and general genome tinkerers.

“The original Cas9 is like a Swiss Army knife with only one application: It’s a knife,” says Gene Yeo, an RNA biologist at the University of California, San Diego. But Yeo and other researchers have bolted other proteins and chemicals to the dulled blades and transformed the knife into a multifunctional tool.

Zhang and colleagues are also exploring trading the Cas9 part of the system for other enzymes that might expand the types of manipulations scientists can perform on DNA and other molecules. With the expanded toolbox, researchers may have the power to pry open secrets of cancer and other diseases and answer new questions about biology.

Many enzymes can cut DNA; the first were discovered in the 1970s and helped to launch the whole field of genetic engineering. What makes CRISPR/Cas9 special is its precision. Scientists can make surgical slices in one selected spot, whereas early tools cut wherever a short recognition sequence happened to appear, often at many places in a genome. A few more recent gene-editing technologies, such as zinc finger nucleases and TALENs, could also lock on to a single target. But those gene editors are hard to redirect: A scientist who wants to snip a new spot in the genome has to build a new editor. That’s like having to assemble a unique guided missile for every possible target on a map. With CRISPR/Cas9, that’s not necessary.

The secret to CRISPR’s flexibility is its guidance system. A short piece of RNA shepherds the Cas9 cutting enzyme to its DNA target. The “guide RNA” can home in on any place a researcher selects by chemically pairing with DNA’s information-containing building blocks, or bases (denoted by the letters A, T, C and G). Making a new guide RNA is easy; researchers often simply order one online by typing in the desired sequence of bases.
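
To make the guidance idea concrete, here is a toy Python sketch of how a guide sequence picks out one spot in a string of DNA letters. It is a simplification built on assumptions that go beyond this article: a 20-base guide, the short “NGG” motif that S. pyogenes Cas9 needs right after its target, a cut about three bases before that motif, exact matches only and a single DNA strand. The function names and sequences are hypothetical, chosen for illustration.

```python
# Toy model of guide-RNA targeting. Assumptions beyond the article:
# a 20-base guide, S. pyogenes Cas9's "NGG" motif right after the target,
# a cut about 3 bases before that motif, exact matches, one strand only.

GUIDE_LENGTH = 20


def rna_to_dna(guide_rna: str) -> str:
    """RNA uses U where DNA uses T; convert so the sequences can be compared."""
    return guide_rna.upper().replace("U", "T")


def find_cut_sites(genome: str, guide_rna: str) -> list[int]:
    """Return the index of the first base after each predicted cut."""
    target = rna_to_dna(guide_rna)
    if len(target) != GUIDE_LENGTH:
        raise ValueError("this sketch expects a 20-base guide")
    sites = []
    start = genome.find(target)
    while start != -1:
        motif = genome[start + GUIDE_LENGTH:start + GUIDE_LENGTH + 3]
        if len(motif) == 3 and motif.endswith("GG"):  # "N" can be any base
            sites.append(start + GUIDE_LENGTH - 3)
        start = genome.find(target, start + 1)
    return sites


guide = "GACGCAUAAAGAUGAGACGC"  # hypothetical 20-base guide RNA
genome = "TTTT" + "GACGCATAAAGATGAGACGC" + "TGG" + "AAAA"
print(find_cut_sites(genome, guide))  # [21]
```

Retargeting the sketch means passing in a different guide string; nothing else changes, which mirrors why ordering a new guide RNA is all it takes to aim CRISPR/Cas9 somewhere new.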

That guidance system is taking genetic engineers to places they’ve never been. “With CRISPR, literally overnight what had been the biggest frustration of my career turned into an undergraduate side project,” says Reed, of Cornell University. “It was incredible.”

Reed studies how patterns are painted on butterfly and moth wings. How such color patterns arise is one of the fundamental questions evolutionary and developmental biologists have been trying to answer for decades. In 1994, Sean B. Carroll and colleagues discovered that a gene called Distal-less is turned on in butterfly wings in places where eyespots later form. The gene appeared to be needed for eyespot formation, but the evidence was only circumstantial. That’s where researchers have been stuck for 20 years, Reed says. They had no way to manipulate genes in butterfly wings to get more direct proof of the role of different genes in painting wing patterns.

With CRISPR/Cas9, Reed and Cornell colleague Linlin Zhang cut and disabled the Distal-less gene at an early stage of wing development and got an unexpected result: Rather than cause eyespots, Distal-less limits them. When CRISPR/Cas9 knocks out Distal-less, more and bigger eyespots appear, the researchers reported in June in Nature Communications. Reed and colleagues have snipped genes in not just one, but six different butterfly species using CRISPR, he says.

CRISPR cuts genes very well, maybe too well, says neuroscientist Marc Tessier-Lavigne of Rockefeller University in New York City. “The Cas9 enzyme is just so prolific. It cuts and recuts and recuts,” he says. That constant snipping can result in unwanted mutations in genes that researchers are editing or in genes that they never intended to touch. Tessier-Lavigne and colleagues figured out how to tame the overeager enzyme and keep it from julienning the genes of human stem cells grown in lab dishes. With better control, the researchers could introduce mutations into one or both copies of two genes involved in early-onset Alzheimer’s disease, they reported in the May 5 Nature. Growing the mutated stem cells into brain cells showed that increasing the number of mutated copies of the genes boosts production of the amyloid-beta peptide that forms plaques in Alzheimer’s-afflicted brains. The technique could make lab-grown stem cells better mimics of human diseases.

While Tessier-Lavigne and others are working to improve the CRISPR/Cas9 system, building better guide RNAs and increasing the specificity of its cuts, some researchers are turning away from snippy Cas9 altogether.

Nuanced edits

Cas9 isn’t entirely to blame for the mess created when it causes a double-stranded break by slicing through both rails of the DNA ladder. “The cell’s response to double-stranded breaks is the source of a lot of problems,” says David Liu, a chemical biologist at Harvard University. A cell’s go-to method for fixing a DNA breach is to glue the cut ends back together. But often a few bases are missing or bits get stuck where they don’t belong. The result is more genome “vandalism than editing,” Liu says, quoting Harvard colleague George Church.

Liu wanted a gene editor that wouldn’t cause any destructive breaches: One that could A) go to a specific site in a gene and B) change a particular DNA base there, all without cutting DNA. The tool didn’t exist, but in Cas9, Liu and colleagues saw the makings of one, if they could tweak it just a bit.

They started by dulling Cas9’s cutting edge, effectively killing the enzyme. The “dead” Cas9 could still grip the guide RNA and ride it to its destination, but it couldn’t slice through DNA’s double strands. Liu and colleagues then attached a hitchhiking enzyme, whose job is to initiate a series of steps that change the DNA base C into a T (or, read on DNA’s opposite strand, a G into an A). The researchers had to tinker with the system in other ways to get the change to stick. Once they worked out the kinks, they could make permanent single base-pair changes in 15 to 75 percent of the DNA they targeted without introducing the insertions and deletions that traditional CRISPR editing often leaves behind. Liu and collaborators reported the accomplishment in Nature in May. A similar base editor, reported in Science in August by researchers in Japan, may be useful for editing DNA in bacteria and other organisms that can’t tolerate having their DNA cut.
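
A toy Python model can show why a C-to-T change and a G-to-A change go together. DNA’s two strands pair C with G and T with A, so when a C on one strand becomes a T and the partner strand is rebuilt to match, the G that sat opposite becomes an A. The fixed “editing window” below is a hypothetical parameter standing in for the short stretch of the target where such an enzyme acts; the function names and sequence are illustrative, not taken from Liu’s work.

```python
# Toy model of a cutting-free base editor (hypothetical names and window).
COMPLEMENT = {"A": "T", "T": "A", "C": "G", "G": "C"}


def complement(strand: str) -> str:
    """Partner strand, written base by base underneath (C-G and A-T pairs)."""
    return "".join(COMPLEMENT[base] for base in strand)


def base_edit(strand: str, window: range) -> str:
    """Change each C inside the window to T; no bases are cut out or added."""
    return "".join(
        "T" if base == "C" and i in window else base
        for i, base in enumerate(strand)
    )


top = "ACCGTACGGA"
edited = base_edit(top, range(1, 3))  # hypothetical window: positions 1 and 2
print(top, "->", edited)                          # ACCGTACGGA -> ATTGTACGGA
print(complement(top), "->", complement(edited))  # TGGCATGCCT -> TAACATGCCT
```

The C at position 6 lies outside the window and is left alone, and the edited strand keeps its original length, unlike the insertions and deletions that cut-and-glue repair can leave.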

There are 12 possible combinations of DNA base swaps. The hitchhiking enzyme that Liu used, cytidine deaminase, can make two of the swaps. Liu and others are working to fuse enzymes to Cas9 that can do the 10 others. Other enzyme hitchhikers may make it possible to edit single DNA bases at will, Liu says. Such a base editor could be used to fix single mutations that cause genetic diseases such as cystic fibrosis or muscular dystrophy. It might even correct the mutations that lead to inherited breast cancer.
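
The count of 12 comes from simple arithmetic: each of the four bases could in principle be swapped for any of the other three, and 4 × 3 = 12. A short Python check enumerates the ordered swaps and sets aside the two that the article credits to cytidine deaminase.

```python
from itertools import permutations

BASES = "ACGT"

# Every ordered (from, to) pair of two different bases is one possible swap.
all_swaps = list(permutations(BASES, 2))

# The two swaps the article credits to cytidine deaminase.
deaminase_swaps = {("C", "T"), ("G", "A")}
remaining = [swap for swap in all_swaps if swap not in deaminase_swaps]

print(len(all_swaps), len(remaining))  # 12 10
```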

Rewriting the score

Dead Cas9 is already helping researchers tinker with DNA in ways they couldn’t before. Variations on the dull blade may help scientists solve one of the great mysteries of biology: How does the same set of 20,000 genes give rise to so many different types of cells in the body?

The genome is like a piano, says Jonathan Weissman, a biochemist at the University of California, San Francisco. “You can play a huge variety of different music with only 88 keys by how hard you hit the keys, what keys you mix up and the timing.” By dialing down or turning up the activity of combinations of genes at precise times during development, cells are coaxed into becoming hundreds of different types of body cells.

For the last 20 years, researchers have been learning more about that process by watching when certain genes turn on and off in different cells. Gene activity is controlled by a dizzying variety of proteins known as transcription factors. When and where a transcription factor acts is at least partly determined by chemical tags on DNA and the histone proteins that package it. Those tags are known collectively as epigenetic marks. They work something like the musical score for an orchestra, telling the transcription factor “musicians” which notes to hit and how loudly or softly to play. So far, scientists have only been able to listen to the music. With dead Cas9, researchers can create molecules that will change epigenetic notes at any place in the score, Weissman says, allowing researchers to arrange their own music.

Epigenetic marks have been linked to addiction, cancer, mental illness, obesity, diabetes and heart disease. But scientists haven’t been able to prove that epigenetic marks are really behind these and other ailments, because they could never go into a cell and change just one mark on one gene to see if it really produced a sour note.

One such epigenetic mark, the attachment of a chemical called an acetyl group to a particular amino acid in a histone protein, is often associated with active genes. But no one could say for sure that the mark was responsible for making those genes active. Charles Gersbach of Duke University and colleagues reported last year in Nature Biotechnology that they had fused dead Cas9 to an enzyme that could make that epigenetic mark. When the researchers placed the epigenetic mark on certain genes, activity of those genes shot up, evidence that the mark really does boost gene activity. With such CRISPR epigenetic editors in hand, researchers may eventually be able to correct errant marks to restore harmony and health.

Weissman’s lab group was one of the first to turn dead Cas9 into a conductor of gene activity. Parking dead Cas9 on a gene is enough to nudge down the volume of some genes’ activity by blocking the proteins that copy DNA into RNA, the researchers found. Fusing a protein that silences genes to dead Cas9 led to even better noise-dampening of targeted genes. The researchers reported in Cell in 2014 that they could reduce gene activity by 90 to 99 percent for some genes using the silencer (which Weissman and colleagues call CRISPRi, for interference). A similar tool, created by fusing proteins that turn on, or activate, genes to dead Cas9 (called CRISPRa, for activator) lets researchers crank up the volume of activity from certain genes. In a separate study, published in July in the Proceedings of the National Academy of Sciences, Weissman and colleagues used their activation scheme to find new genes that make cancer cells resistant to chemotherapy drugs.

RNA revolution

New, refitted Cas9s won’t just make manipulating DNA easier. They also could revolutionize RNA biology. There are already multiple molecular tools for grabbing and cutting RNA, Yeo says. So for his purposes, scissors weren’t necessary or even desirable. The homing ability of CRISPR/Cas9 is what Yeo found appealing.

He started simple, by using a tweaked CRISPR/Cas9 to tag RNAs to see where they go in the cell. Luckily, in 2014, Jennifer Doudna at the University of California, Berkeley — one of the researchers who in 2012 introduced CRISPR/Cas9 — and colleagues reported that Cas9 could latch on to messenger RNA molecules, or mRNAs (copies of the protein-building instructions contained in DNA). In a study published in April in Cell, Doudna, Yeo and colleagues strapped fluorescent proteins to the back of a dead Cas9 and pointed it toward mRNAs from various genes.

With the glowing Cas9, the researchers tracked mRNAs produced from several different genes in living cells. (Many previous methods for pinpointing RNA’s location in a cell required killing the cell.) In May, Zhang of MIT and colleagues described a two-color RNA-tracking system in Scientific Reports. Yet another group of researchers described a CRISPR rainbow for giving DNA a multicolored glow, also in living cells. That glow allowed the team to pinpoint the locations of up to six genes and see how the three-dimensional structure of chromosomes in the nucleus changes over time, the researchers reported in the May Nature Biotechnology. A team from UC San Francisco reported in January in Nucleic Acids Research that it had tracked multiple genes using combinations of two color tags.

But Yeo wants to do more than watch RNA move around. He envisions bolting a variety of different proteins to Cas9 to manipulate and study the many steps an mRNA goes through between being copied from DNA and having its instructions read to make a protein. Learning more about that multistep process and what other RNAs do in a cell could help researchers understand what goes wrong in some diseases, and maybe learn how to fix the problems.

Zhang wants to improve Cas9, but he would also like other versatile tools. He and colleagues are looking for such tools in bacteria.

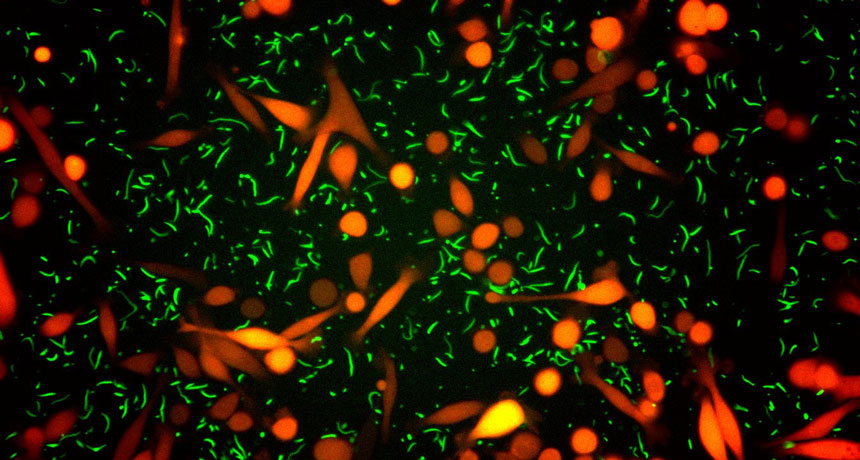

CRISPR/Cas9 was first discovered in bacteria as a rudimentary immune system for fighting off viruses (SN: 12/12/15, p. 16). It zeroes in on and then shreds the viral DNA. Researchers most often use the Cas9 cutting enzyme from Streptococcus pyogenes bacteria.

But almost half of all bacteria have CRISPR immune systems, scientists now know, and many use enzymes other than Cas9. In the bacterium Francisella novicida U112, Zhang and colleagues found a gene-editing enzyme, Cpf1, which does things a little differently than Cas9 does. It has a different “cut here” signal that could make it more suitable than Cas9 for cutting DNA in some cases, the team reported last October in Cell. Cpf1 can also chop one long guide RNA into multiple guides, so researchers may be able to edit several genes at once. And Cpf1 makes a staggered cut, leaving one strand of the DNA slightly longer than the other. Those short single-stranded “sticky ends” could make it easier to insert new genes into DNA.
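
The difference between a blunt cut and a staggered one can be sketched in a few lines of Python. Both strands are written left to right, with the bottom strand as the base-by-base complement of the top. Cutting both strands at the same position leaves flush ends; offsetting the two cuts leaves a short single-stranded tail on each piece, and the two tails can pair with each other. The 5-base offset in the example is an illustrative assumption rather than a measured value for Cpf1, and the helper name is hypothetical.

```python
# Toy model of blunt versus staggered double-strand cuts (hypothetical helper).
COMPLEMENT = {"A": "T", "T": "A", "C": "G", "G": "C"}


def cut_overhangs(top: str, top_cut: int, bottom_cut: int) -> tuple[str, str]:
    """Return the unpaired tails (left piece's bottom strand, right piece's top strand).

    Positions index the top strand; the bottom strand is its complement aligned
    underneath. Equal positions mean a blunt cut and empty tails.
    """
    if top_cut > bottom_cut:
        raise ValueError("this sketch only models top_cut <= bottom_cut")
    bottom = "".join(COMPLEMENT[base] for base in top)
    left_tail = bottom[top_cut:bottom_cut]  # bottom-strand bases with no top partner
    right_tail = top[top_cut:bottom_cut]    # top-strand bases with no bottom partner
    return left_tail, right_tail


dna = "AAAAAGCTAGCTTTTT"
print(cut_overhangs(dna, 8, 8))   # ('', '')  blunt cut, as Cas9 typically makes
print(cut_overhangs(dna, 5, 10))  # ('CGATC', 'GCTAG')  staggered by 5 bases
```

Because the two tails are complementary, a new piece of DNA carrying matching tails could pair with them, which is why sticky ends may help when inserting genes.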

Zhang more recently found an enzyme in the bacterium Leptotrichia shahii that could tinker with RNA. The RNA-cutting enzyme is called C2c2, he and colleagues reported August 5 in Science. Like Cas9, C2c2 uses a guide RNA to lead the way, but instead of slicing DNA, it chops RNA.

Zhang’s team is exploring other CRISPR/Cas9-style enzymes that could help them “edit or modulate or interact with a genome more efficiently or more effectively,” he says. “Our search is not done yet.”

The explosion of new ways to use CRISPR hasn’t ended. “The field is advancing so rapidly,” says Zhang. “Just looking at how far we have come in the last three and a half years, I think what we’ll see coming in the next few years will just be amazing.”