During the world’s first telephone call in 1876, Alexander Graham Bell summoned his assistant from the other room, stating simply, “Mr. Watson, come here. I want to see you.” In 2017, scientists testing another newfangled type of communication were a bit more eloquent. “It is such a privilege and thrill to witness this historical moment with you all,” said Chunli Bai, president of the Chinese Academy of Sciences in Beijing, during the first intercontinental quantum-secured video call.

The more recent call, between researchers in Austria and China, capped a series of milestones reported in 2017 and made possible by the first quantum communications satellite, Micius, named after an ancient Chinese philosopher (SN: 10/28/17, p. 14).

Created by Chinese researchers and launched in 2016, the satellite is fueling scientists’ dreams of a future in which sensitive communiqués are safe from hacking. One day, impenetrable quantum cryptography could protect correspondence. A secret string of numbers known as a quantum key could encrypt a credit card number sent over the internet, or encode the data transmitted in a video call, for example. That quantum key would be derived by measuring the properties of quantum particles beamed down from such a satellite. The laws of quantum mechanics ensure that any snoop trying to intercept the key would disturb those particles, and so give the intrusion away.
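To make the idea of a key "encrypting a credit card number" concrete, here is a minimal sketch of one simple scheme, the one-time pad, in which each byte of the message is combined with one byte of a shared secret key. This is an illustration under stated assumptions, not a description of the cipher the Micius team used: the function name `xor_bytes` is hypothetical, the card number is a made-up test value, and the key comes from Python's `secrets` module as a stand-in for a key that would really be distilled from measurements of quantum particles.

```python
import secrets


def xor_bytes(data: bytes, key: bytes) -> bytes:
    """Combine each data byte with one key byte using XOR (one-time pad)."""
    if len(key) < len(data):
        raise ValueError("key must be at least as long as the data")
    return bytes(d ^ k for d, k in zip(data, key))


# Made-up test value standing in for a real card number.
card_number = b"4111 1111 1111 1111"

# Assumption: an ordinary random key stands in for a quantum-derived key.
shared_key = secrets.token_bytes(len(card_number))

ciphertext = xor_bytes(card_number, shared_key)  # what travels over the internet
recovered = xor_bytes(ciphertext, shared_key)    # receiver applies the same key
```

Applying the same key twice returns the original message, which is why both parties need an identical key and why nobody else can be allowed to learn it. Quantum key distribution addresses only that key-sharing step; the encryption itself is ordinary arithmetic.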

“Quantum cryptography is a fundamentally new way to give us unconditional security ensured by the laws of quantum physics,” says Chao-Yang Lu, a physicist at the University of Science and Technology of China in Hefei, and a member of the team that developed the satellite.

But until 2017, the technology’s development faced a sticking point: Long-distance communication is extremely challenging, Lu says. That’s because quantum particles are delicate beings, easily jostled out of their fragile quantum states. In a typical quantum cryptography scheme, particles of light called photons are sent through the air, where the particles may be absorbed or their properties muddled. The longer the journey, the fewer photons make it through intact, eventually preventing accurate transmissions of quantum keys. So quantum cryptography was possible only across short distances, between nearby cities but not far-flung ones.
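A rough calculation shows why distance is so punishing. The sketch below assumes a medium that loses a fixed number of decibels per kilometer, so the surviving fraction of photons shrinks exponentially with distance. The function name `surviving_fraction` is hypothetical, and the loss figure of 0.2 decibels per kilometer is an illustrative assumption (a value often quoted for telecom optical fiber), not a number from the Micius experiments.

```python
def surviving_fraction(distance_km: float, loss_db_per_km: float) -> float:
    """Fraction of photons that arrive, for a constant loss per kilometer."""
    total_loss_db = loss_db_per_km * distance_km
    # Every 10 dB of loss cuts the number of surviving photons tenfold.
    return 10 ** (-total_loss_db / 10)


# Illustrative assumption: 0.2 dB of loss per kilometer.
ASSUMED_LOSS_DB_PER_KM = 0.2

for distance_km in (50, 100, 500, 1200):
    fraction = surviving_fraction(distance_km, ASSUMED_LOSS_DB_PER_KM)
    print(f"{distance_km:>5} km: {fraction:.3e} of photons survive")
```

Under this assumption, doubling the distance squares the surviving fraction rather than halving it, so a link that works between nearby cities becomes hopeless well before it spans a continent.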

With Micius, however, scientists smashed that distance barrier. Long-distance quantum communication became possible because traveling through space, with no atmosphere to stand in the way, is much easier on particles.

In the spacecraft’s first record-breaking accomplishment, reported June 16 in Science, the satellite used onboard lasers to beam down pairs of entangled particles, which have eerily linked properties, to two cities in China, where the particles were captured by telescopes (SN: 8/5/17, p. 14). The quantum link remained intact over a separation of 1,200 kilometers between the two cities — about 10 times farther than ever before. The feat revealed that the strange laws of quantum mechanics, despite their small-scale foundations, still apply over incredibly large distances.

Next, scientists tackled quantum teleportation, a process that transmits the properties of one particle to another particle (SN Online: 7/7/17). Micius teleported photons’ quantum properties 1,400 kilometers from the ground to space — farther than ever before, scientists reported September 7 in Nature. Despite its sci-fi name, teleportation won’t be able to beam Captain Kirk up to the Enterprise. Instead, it might be useful for linking up future quantum computers, making the machines more powerful.

The final piece in Micius’ triumvirate of tricks is quantum key distribution — the technology that made the quantum-encrypted video chat possible. Scientists sent strings of photons from space down to Earth, using a method designed to reveal eavesdroppers, the team reported in the same issue of Nature. By performing this process with a ground station near Vienna, and again with one near Beijing, scientists were able to create keys to secure their quantum teleconference. In a paper published in the Nov. 17 Physical Review Letters, the researchers performed another type of quantum key distribution, using entangled particles to exchange keys between the ground and the satellite.
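How can sending photons reveal an eavesdropper? The toy simulation below sketches the textbook BB84 protocol, a simplified stand-in for the method used with Micius, whose details this article does not spell out. The sender encodes each random bit in one of two randomly chosen bases (written `+` and `x` here), the receiver measures in a random basis, and the two keep only the bits where their bases happened to match. Measuring in the wrong basis gives a coin-flip result, so an eavesdropper who intercepts and resends photons introduces errors. All names here (`measure`, `run_bb84`) are hypothetical, and the model ignores photon loss and detector noise.

```python
import random


def measure(bit: int, prep_basis: str, meas_basis: str, rng: random.Random) -> int:
    """Matching bases return the encoded bit; mismatched bases give a coin flip."""
    return bit if prep_basis == meas_basis else rng.randint(0, 1)


def run_bb84(n_photons: int, eavesdrop: bool, seed: int = 0):
    """Return (number of sifted key bits, error rate between the two keys)."""
    rng = random.Random(seed)
    sender_key, receiver_key = [], []
    for _ in range(n_photons):
        bit = rng.randint(0, 1)
        send_basis = rng.choice("+x")
        photon_bit, photon_basis = bit, send_basis
        if eavesdrop:
            # Intercept-resend attack: the snoop measures in a guessed basis
            # and forwards a fresh photon prepared in that basis.
            eve_basis = rng.choice("+x")
            photon_bit = measure(photon_bit, photon_basis, eve_basis, rng)
            photon_basis = eve_basis
        recv_basis = rng.choice("+x")
        result = measure(photon_bit, photon_basis, recv_basis, rng)
        if recv_basis == send_basis:  # sifting: keep matching-basis rounds only
            sender_key.append(bit)
            receiver_key.append(result)
    if not sender_key:
        return 0, 0.0
    errors = sum(a != b for a, b in zip(sender_key, receiver_key))
    return len(sender_key), errors / len(sender_key)


sifted, error_rate = run_bb84(4000, eavesdrop=False)
sifted_eve, error_rate_eve = run_bb84(4000, eavesdrop=True)
print(f"no eavesdropper:   {sifted} key bits, error rate {error_rate:.3f}")
print(f"with eavesdropper: {sifted_eve} key bits, error rate {error_rate_eve:.3f}")
```

In this idealized model the two keys agree perfectly when nobody is listening, while an intercept-resend attack corrupts about a quarter of the sifted bits on average. By comparing a sample of their bits in public, the two parties can spot that error rate and discard the compromised key.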

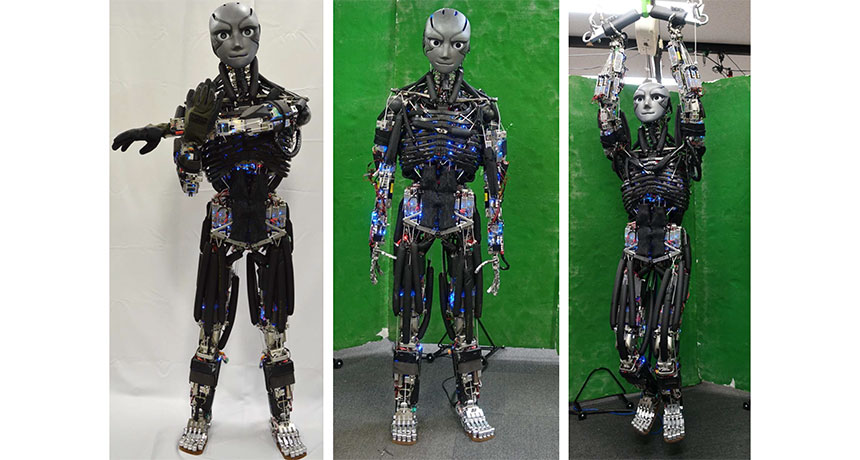

The satellite is “a major development,” says quantum physicist Thomas Jennewein of the University of Waterloo in Canada, who is not involved with Micius. Although quantum communication was already feasible in carefully controlled laboratory environments, the Chinese researchers had to upgrade the technology to function in space. Sensitive instruments were designed to survive fluctuating temperatures and vibrations on the satellite. Meanwhile, the scientists had to scale down their apparatus so it would fit on a satellite. “This has been a grand technical challenge,” Jennewein says.

The Chinese team plans eventually to launch about 10 additional satellites, which would fly in formation to extend coverage to more areas of the globe.